Chatty Factories

The first industrial revolution saw a transformation based on water and steam power. The second harnessed electricity to support mass production. The third used electronics and IT to innovate and automate. Now, the World Economic Forum defines the fourth industrial revolution as "a fusion of technologies that is blurring the lines between the physical, digital, and biological spheres". This builds on the rapid growth of the third revolution to bring data, artificial intelligence and robotic fabrication systems to the forefront of the manufacturing industry.

Current manufacturing systems are characterised by a multitude of interconnected procedures that include many discrete, highly specialised and human-intensive activities, such as consumer research, concept design, engineering design & prototyping, and manufacturing operations that combine robots with human workers on the factory floor. Two significant limitations of current manufacturing systems are (a) the inability to quickly and continuously refine product design in response to real-time consumer insights (i.e. how the product is being used and its 'experience' of the world) and (b) the inability to quickly reconfigure and reskill the human and robotic production elements on the factory floor in response to real-time data captured from embedded product sensors. For example, if sensor data suggests a product needs a design change based on its current use, how do we update the fabrication instructions, reshuffle the factory floor between shifts, and tell human and robot workers how to alter their duties within minutes?

Our vision for the manufacturing factory of the future is to embrace the rapid growth of internet-connected products via embedded sensors producing massive volumes of data, and transform these traditionally discrete activities into one seamless process that is capable of real-time continuous product refinement. Firstly, mindful of the potential disruption to labour markets, we will develop new fundamental theory that relates to learning and seamless communication between products, humans, robots and factory floor operations - to ensure equality and collaborative real-time learning. Secondly, we will develop data-driven systems that provide an auditable, secure and seamless flow of information between all operations inside and outside the factory to facilitate real-time adaption and re-orientation of the entire manufacturing system based on data harvested via product-embedded sensors and Internet of Things (IoT) connectivity.

The research will achieve a radical interruption of the existing 'consumer sovereignty' model based around surveys and market research - and introduce 'use sovereignty' via an embedded understanding of consumer behaviour - making products that are fit for purpose based on how they are used. This will not be unmediated but buffered by robust, secure and interpretable data analysis at scale - with ethical integration of human labour. Designers will have a completely transformed role being 'embedded in production' in a world of "chatty" products and a dynamically evolving factory floor.

Our approach will transform the ways in which traditional factories are reconfigured in real time, taking a first-mover approach to reconfiguration and to the reskilling of production elements (human, robot or both collaboratively). Radically, we will use exopedagogy, applying it in a practical setting for the first time, and couple it with insights from robotics. Exopedagogy considers alien forms of learning and uses those metaphors to develop models of learning which go beyond human learning. In contrast to existing techniques such as jet engine telemetry, which allow for optimisation tweaks around a clearly defined product, our work will allow for both i) redesigns to support new uses or usage patterns and ii) generation of new products based on observing alternative use.

ChattyFactories main project website

Publications

Publications involving Edinburgh staff. See the main ChattyFactories website for a full list of research outcomes.

- Murray-Rust, D., Gorkovenko, K., Burnett, D., & Richards, D. (2019). Entangled Ethnography: Towards a Collective Future Understanding. Proceedings of the Halfway to the Future Symposium 2019, 1–10. New York, NY, USA: Association for Computing Machinery.

- Gorkovenko, K., Burnett, D. J., Thorp, J. K., Richards, D., & Murray-Rust, D. (2020). Exploring The Future of Data-Driven Product Design. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1–14. New York, NY, USA: Association for Computing Machinery.

- Gorkovenko, K., Burnett, D., Thorp, J., Richards, D., & Murray-Rust, D. (2019). Supporting Real-Time Contextual Inquiry Through Sensor Data. Ethnographic Praxis in Industry (EPIC2019).

- Burnett, D., Thorp, J., Richards, D., Gorkovenko, K., & Murray-Rust, D. (2019). Digital Twins as a Resource for Design Research. Proceedings of the 8th ACM International Symposium on Pervasive Displays, 1–2. New York, NY, USA: Association for Computing Machinery.

- Burnap, P., Branson, D., Murray-Rust, D., Preston, J., Richards, D., Burnett, D., … Thorp, J. (2019). Chatty Factories: A Vision for the Future of Product Design and Manufacture with IoT. Living in the IoT: PETRAS/IET Conference 2019.

People

Current

- Adam Jenkins

- Dave Murray-Rust

- Kami Vaniea

Funding

Research and projects here are primarily funded by EPSRC's New Industrial Systems: Chatty Factories grant.

Related Student Projects

Projects by undergraduates, masters, and intern students that explore topics related to the Chatty Factories project. Projects are usually inspired by the main project research but each project is the student's own.

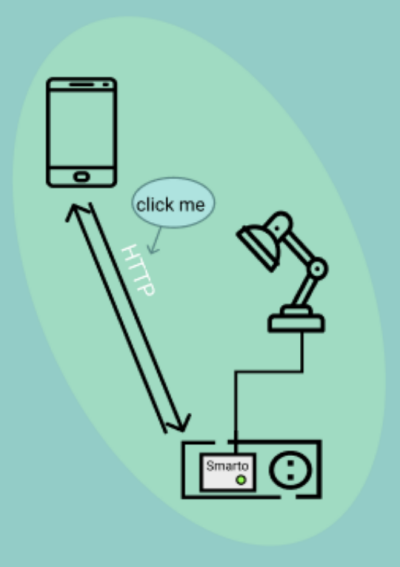

Analysis of Network Traffic to Create an Educational Visualisation of the IoT Ecosystem

Anna Aloshine

Supervisors: Kami Vaniea, Nicole Meng

The project aims to help the general public understand how IoT devices communicate within the home through visualizations, particularly how they interact with other devices such as phones, routers and hubs like Alexa. It collected packets from a real IoT device and used the real packet flows to generate a set of scenarios and visualizations that walk a user through what the device is doing.

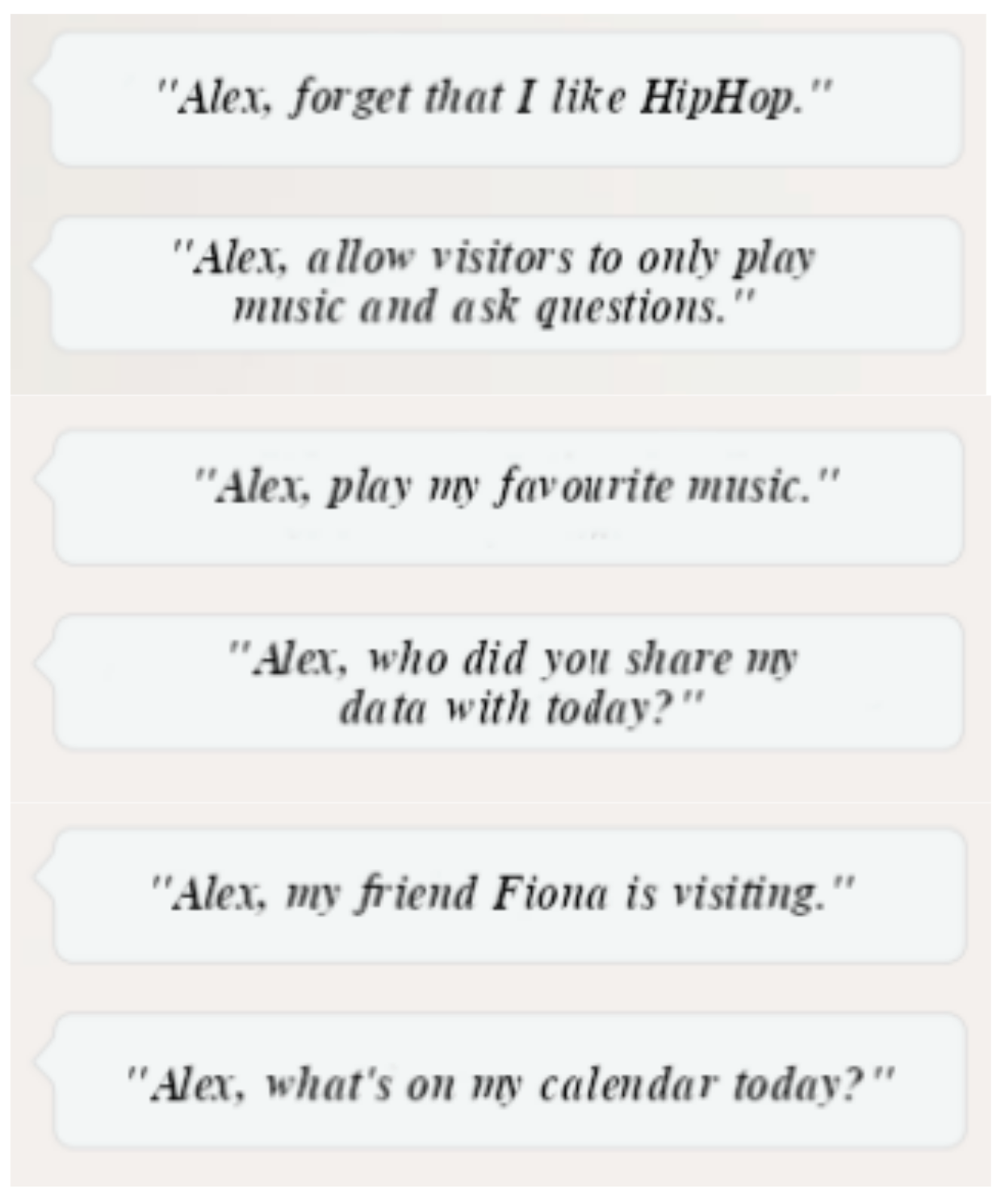

Of Smart Speakers and Men - An Exploration of Privacy and Security Perceptions of Smart Speaker Users in Shared Spaces

Nicole Meng

Supervisors: Kami Vaniea, Bettina Nissen

Smart speakers are increasingly adopted into our everyday life. Sometimes they are placed in shared spaces, where they automatically turn every person in the room into a user (visitor), even those who do not regularly interact with them. Previous work primarily focuses on smart speaker adoption and owners, but does not consider the implications of smart speakers for visitors. Our research aims to determine differences between owners and visitors in mental and threat models, privacy perceptions, protection strategies, and factors of discomfort. We also want to identify which areas of smart speakers need to be addressed to improve smart speaker interactions for both owners and visitors.